Software projects have critical stages, such as requirements definition and development, and we know that the final result depends on the discipline with which these steps are executed. However, one critical stage is often overlooked or does not get the attention it needs: the definition of the architecture. This stage largely determines how smooth the development and release process will be, and therefore the overall success of the project.

Each project is unique, which makes the considerations and process involved in choosing the architecture particularly important. Variables such as the programming language, the framework, the number of users, and the nature of the tasks being executed determine which resources and tools will perform best for a given implementation.

Consider a real-world use case: an application that centralizes information from multiple APIs and stores highly sensitive user information in different databases. The architecture must ensure that, over time, more integrations can be added to extend the tool's functionality without affecting performance or disrupting the release process. This is where a microservice architecture stands out as the best fit.

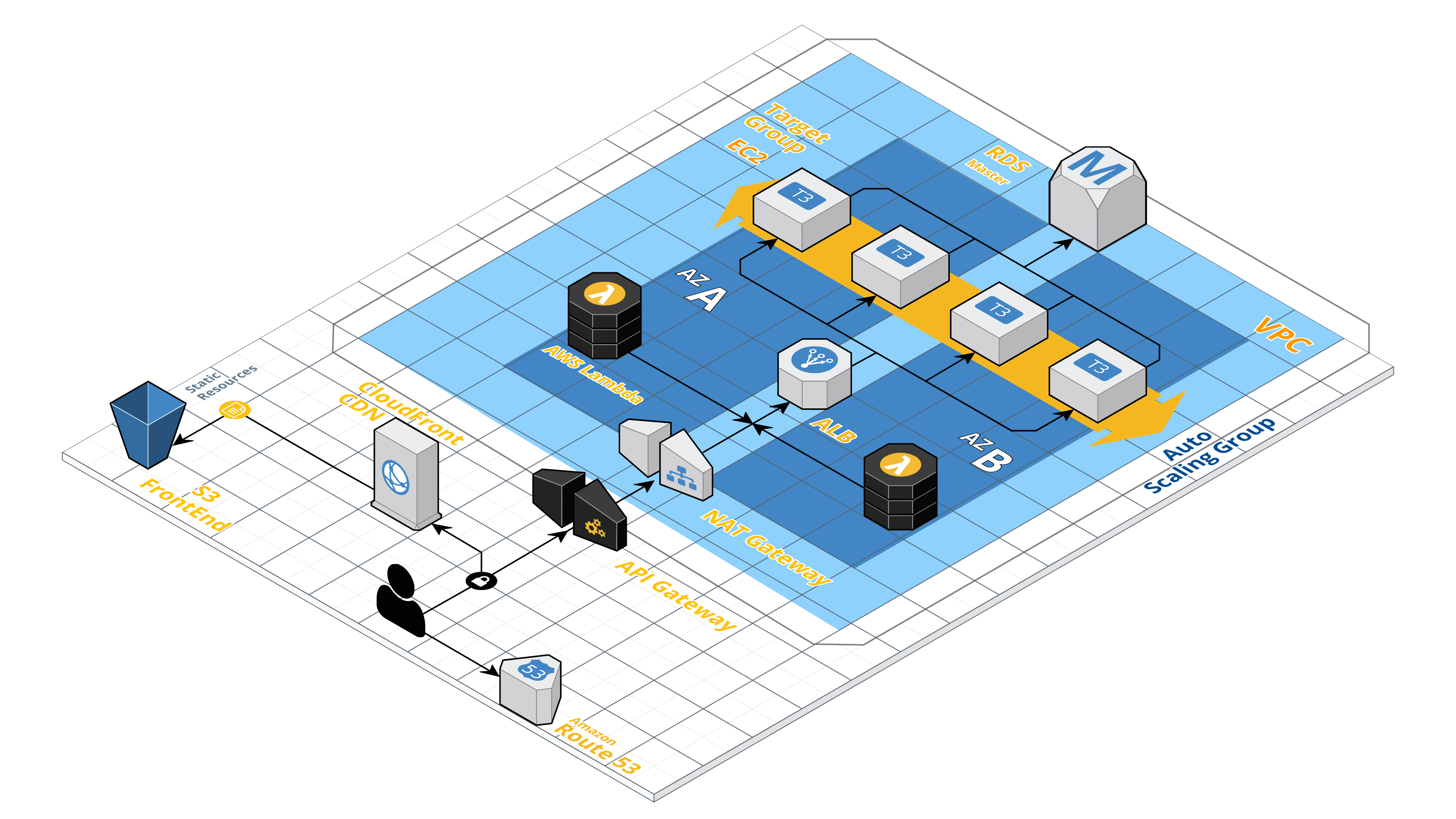

Thanks to Amazon Web Services (AWS) and its Elastic Compute Cloud (EC2), Elastic Container Service (ECS), and Elastic Container Registry (ECR) services, it is possible to build a reliable, maintainable infrastructure that offers enough flexibility and security, backed by the Virtual Private Cloud (VPC) service. The architecture shown in the diagram can support multiple microservices, thanks to the low resource consumption of the Micro.js framework and the serverless execution model of AWS Lambda.

The following characteristics stand out:

- Micro.js is a very lightweight framework that consumes few resources (the whole project is about 260 lines of code). It achieves this by focusing on single-purpose modules and by being designed around `async` and `await`. It is easy to deploy in containers and can serve as the HTTP layer for the project's GraphQL implementation.

- AWS Lambda functions handle routing and relay communication between the client and the microservices. The API Gateway service exposes the API to clients; it is an AWS service for publishing, monitoring, documenting, and managing APIs.

- EC2 instances running the ECS agent host the microservices in containers. Traffic to these instances is distributed by an Application Load Balancer (ALB), and the instances belong to an Auto Scaling Group (ASG) that adds or removes capacity as demand changes. This provides high availability and lets the backend respond to traffic variations automatically.

- All Lambda functions and EC2 resources are placed in private subnets of a VPC, so they are not directly reachable from the internet; their outbound traffic leaves through a NAT Gateway. This adds a layer of security that matches the sensitive nature of this application.

- Continuous integration and delivery (CI/CD) is fully automated through a Jenkins CI server that communicates with the ECR, ECS, and AWS Lambda services and keeps them up to date with the code, which lives in a single repository. Each route in the project triggers its own chain of build and deployment steps, so new features are delivered continuously.

- For resource monitoring, Amazon CloudWatch reports the status of the Lambda functions, the ECS containers, and other resources such as the ALB and the ASG in near real time. The Papertrail service is used to collect and filter detailed logs from each container.
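As a rough illustration of the routing role that AWS Lambda plays here, the sketch below maps an incoming API Gateway request path to the internal base URL of the microservice that should handle it. The route table, service names, and internal ALB hostname are hypothetical; the `path` and `httpMethod` fields follow the shape of an API Gateway REST proxy event. A real function would forward the request instead of just returning the target URL.

```typescript
// Minimal subset of an API Gateway (REST, proxy integration) event.
interface ProxyEvent {
  path: string;
  httpMethod: string;
}

interface ProxyResult {
  statusCode: number;
  body: string;
}

// Hypothetical route table: path prefix -> internal microservice base URL.
// The services sit behind an internal ALB inside the private VPC.
const ROUTES: Record<string, string> = {
  "/users": "http://internal-alb.example.com/users-service",
  "/billing": "http://internal-alb.example.com/billing-service",
  "/integrations": "http://internal-alb.example.com/integrations-service",
};

// Return the target URL for a path, or undefined when no prefix matches.
// The longest matching prefix wins, and a prefix only matches on a
// segment boundary ("/users" matches "/users/42" but not "/usersx").
function resolveTarget(path: string): string | undefined {
  let best: string | undefined;
  for (const prefix of Object.keys(ROUTES)) {
    const matches = path === prefix || path.startsWith(prefix + "/");
    if (matches && (best === undefined || prefix.length > best.length)) {
      best = prefix;
    }
  }
  return best === undefined ? undefined : ROUTES[best] + path.slice(best.length);
}

// Lambda-style handler: resolves the route and reports where the request
// would be forwarded. The forwarding call itself is left out of the sketch.
async function handler(event: ProxyEvent): Promise<ProxyResult> {
  const target = resolveTarget(event.path);
  if (target === undefined) {
    return { statusCode: 404, body: JSON.stringify({ error: "No route" }) };
  }
  return {
    statusCode: 200,
    body: JSON.stringify({ method: event.httpMethod, forwardTo: target }),
  };
}
```

Keeping the routing in a table means a new integration can be exposed by adding one entry rather than by changing the handler logic.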
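Because all services live in a single repository, the CI server needs a way to rebuild and redeploy only what changed. The sketch below shows one common approach under an assumed, hypothetical layout: one folder per containerized microservice under `services/`, one per Lambda function under `lambda/`, and common code under `shared/`. It maps the files changed in a commit to the deployment targets that should run. In practice this decision would live in the Jenkins pipeline definition; TypeScript is used here only to keep the examples in one language.

```typescript
// A deployment target: where a rebuilt artifact ends up.
type Target =
  | { kind: "ecs"; service: string } // image pushed to ECR, ECS service updated
  | { kind: "lambda"; fn: string }; // function code updated in AWS Lambda

// Map changed file paths (for example, from `git diff --name-only`) to the
// deployment targets that need a new build. Hypothetical layout:
//   services/<name>/...  -> one containerized microservice
//   lambda/<name>/...    -> one Lambda function
//   shared/...           -> code used everywhere, so everything rebuilds
function affectedTargets(
  changedFiles: string[],
  allServices: string[],
  allFunctions: string[],
): Target[] {
  const services = new Set<string>();
  const functions = new Set<string>();
  for (const file of changedFiles) {
    const [root, name] = file.split("/");
    if (root === "shared") {
      allServices.forEach((s) => services.add(s));
      allFunctions.forEach((f) => functions.add(f));
    } else if (root === "services" && name) {
      services.add(name);
    } else if (root === "lambda" && name) {
      functions.add(name);
    }
    // Any other file (docs, README) triggers no deployment.
  }
  // Sorted output keeps the pipeline order deterministic.
  const ecs = Array.from(services).sort().map((service): Target => ({ kind: "ecs", service }));
  const lambdas = Array.from(functions).sort().map((fn): Target => ({ kind: "lambda", fn }));
  return ecs.concat(lambdas);
}
```

A change under `services/users/` then redeploys only the users container, which is what keeps adding new microservices from slowing down the release of existing ones.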

The key factor of this architecture is that it allows new microservices to be added as they are needed, without resource consumption (CPU/RAM) becoming a concern. This reduces the pressure on the development team, which can add new functionality whose approach, integrations, and scope are completely different from those that already exist.

Additionally, this design takes Auto Scaling a step further: it splits task execution into smaller steps, which reduces the load of each one, and it combines the serverless model of AWS Lambda with the built-in Auto Scaling capability of AWS ECS. This gives us an infrastructure that can scale to almost any level of traffic from the start, with a significant bonus: a low starting cost.
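To give some intuition for how the ECS side reacts to load, here is a simplified model of target tracking, a policy type that both ECS service auto scaling and EC2 Auto Scaling groups support: capacity is adjusted in proportion to how far a metric (here, average CPU utilization) is from its target, then clamped to a configured minimum and maximum. This is an approximation, not AWS's exact algorithm, which adds safeguards such as cooldown periods; the configuration values are hypothetical.

```typescript
interface ScalingConfig {
  minCapacity: number; // never scale below this many tasks or instances
  maxCapacity: number; // never scale above this
  targetValue: number; // desired average metric, e.g. 50 (% CPU)
}

// Simplified target tracking: the total load (capacity * metric) is spread
// so that each unit would sit at the target value. Rounding up favors having
// enough capacity over saving cost.
function desiredCapacity(current: number, metricValue: number, cfg: ScalingConfig): number {
  if (current <= 0) return cfg.minCapacity;
  const raw = Math.ceil((current * metricValue) / cfg.targetValue);
  return Math.min(cfg.maxCapacity, Math.max(cfg.minCapacity, raw));
}

const exampleConfig: ScalingConfig = { minCapacity: 2, maxCapacity: 10, targetValue: 50 };
console.log(desiredCapacity(4, 80, exampleConfig)); // 4 * 80 / 50 = 6.4 -> 7 (scale out)
console.log(desiredCapacity(4, 20, exampleConfig)); // 1.6 -> 2, held at the minimum of 2
console.log(desiredCapacity(8, 90, exampleConfig)); // 14.4 -> 15, capped at the maximum of 10
```

The minimum keeps a baseline for availability, while the maximum bounds cost, which is how the setup can start small and still absorb traffic spikes.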

The team at Ballast Lane Applications can implement this methodology as is, or tweak it as needed to serve the unique needs of its clients.