AI is now embedded in nearly every software product and in software development itself. What separates top engineering organizations from the rest is no longer whether they use AI, but how they use it. Many teams have adopted AI tools, but without clear standards, verified competency, and clear accountability, adoption becomes inconsistent and risky. AI can generate code at unprecedented speed, but it cannot be accountable for uptime, data privacy, architectural integrity, or long-term system health. Productivity may increase while quality, security, and long-term maintainability suffer.

At Ballast Lane Applications, we recognized that AI delivers its real value only when it is applied with intention and structure. Our job is to give engineers the tools, standards, and training to apply it well. Our response is a 100% AI-enabled engineering organization, built on accountability rather than hype. We are not replacing engineers. We are amplifying their judgment.

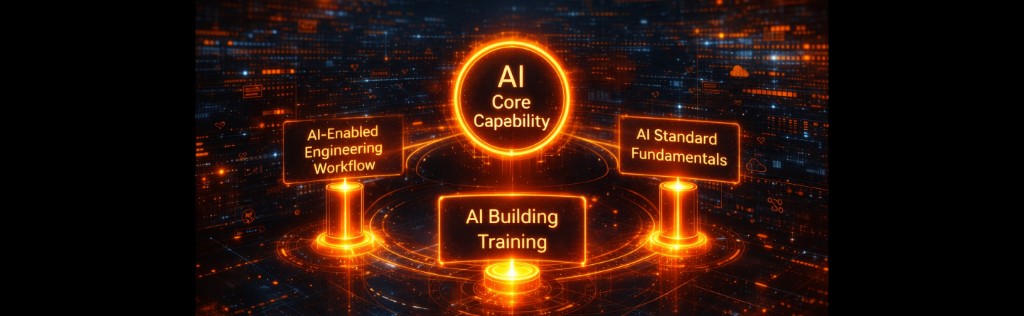

To make AI capability measurable, repeatable, and scalable, we built a three-pillar integration model.

Our Three-Pillar AI Integration Model

At Ballast Lane, AI is a core capability of modern software delivery, not a side experiment or innovation initiative. Because of this, our approach follows a "Build to Last" philosophy: AI supports experienced engineers and improves outcomes, while disciplined engineering practices ensure the delivery of value, reliability, and security.

We operationalize this philosophy through three interconnected pillars: AI-Enabled Engineering Workflow, AI Standard Fundamentals, and AI Building Training.

Pillar #1: AI-Enabled Engineering Workflow

When AI is adopted inconsistently across teams, outcomes become unpredictable and quality varies. At Ballast Lane, we treat AI as part of our engineering system rather than an individual preference. We standardize the use of modern LLM-native development tools such as Cursor, Claude Code, and Windsurf, and integrate them into our delivery workflow with clear expectations and governance. The goal is not uniformity for its own sake, but repeatable quality, accountability, and the ability for teams to collaborate, review, and continuously improve together.

Our AI-first engineering workflow follows a three-layer approach:

- At the organization level, we define shared standards for how AI is used in software development, including expectations for prompt quality, context management via specialization layers, review loops, and ownership of outcomes.

- At the technology practice level, teams establish patterns that fit their domain, such as agent-based workflows, reusable context layers, and task-specific AI usage, while remaining aligned with company standards.

- At the individual level, engineers retain autonomy to choose the best approach for a given problem, but are accountable for understanding, reviewing, and standing behind any AI-assisted output. AI proposes; engineers decide.

We reinforce this model through disciplined execution practices, such as the following:

- Engineers treat context management as a core skill, ensuring AI interactions are grounded in real architectural constraints, coding standards, security requirements, and performance goals.

- Where appropriate, agent-based subroutines are used to handle well-defined, repeatable tasks, with configurations that are versioned, reviewed, and owned by the team.

- All AI-assisted work undergoes human-in-the-loop review, using structured criteria for correctness, maintainability, security, and risk.

This approach allows us to move faster with AI while preserving the engineering judgment and responsibility our clients depend on.
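To make the human-in-the-loop review concrete, here is a minimal sketch of a merge gate built on the four criteria named above. The `AIAssistedChange` record, the function names, and the sample data are hypothetical illustrations, not a description of Ballast Lane's actual tooling.

```python
from dataclasses import dataclass, field

# The four criteria come from the text; everything else here is hypothetical.
REVIEW_CRITERIA = ("correctness", "maintainability", "security", "risk")


@dataclass
class AIAssistedChange:
    """A hypothetical record for one AI-assisted change awaiting review."""
    description: str
    owner: str  # the engineer accountable for the change
    # criterion -> True once a human reviewer has signed off on it
    signoffs: dict[str, bool] = field(default_factory=dict)


def missing_signoffs(change: AIAssistedChange) -> list[str]:
    """Return the criteria that no human reviewer has approved yet."""
    return [c for c in REVIEW_CRITERIA if not change.signoffs.get(c, False)]


def can_merge(change: AIAssistedChange) -> bool:
    """Mergeable only with a named owner and every criterion approved."""
    return bool(change.owner) and not missing_signoffs(change)


change = AIAssistedChange(
    description="Add pagination to the orders endpoint",
    owner="engineer@example.com",
    signoffs={"correctness": True, "security": True},
)
print(missing_signoffs(change))  # ['maintainability', 'risk']
print(can_merge(change))         # False
```

The point of the sketch is the shape of the rule: the AI's output is never self-approving, and a change without an accountable owner cannot pass regardless of how many boxes are ticked.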

Pillar #2: AI Standard Fundamentals

Without a shared understanding of AI fundamentals, teams make inconsistent decisions. To keep decisions consistent and reduce avoidable mistakes, we enforce a shared baseline of AI competency. Every Ballast Lane engineer, whether frontend, backend, mobile, DevOps, or QA, must earn one of the following certifications within six weeks of completing onboarding training and receiving the relevant study material:

Each of these certifications ensures that Ballast Lane engineers understand:

- AI capabilities and limitations: When to use LLMs, traditional ML, or deterministic systems

- Responsible and secure AI usage: Data privacy, compliance, bias mitigation, and architectural safeguards

- Cost, risk, and quality tradeoffs: Token economics, latency considerations, RAG vs. fine-tuning decisions, and observability
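As a rough illustration of the token economics mentioned above, the following sketch estimates per-request and monthly cost from token counts. The prices, token counts, and request volume are made-up placeholders, not real vendor rates or Ballast Lane figures.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelPricing:
    """Hypothetical prices in USD per million tokens (not real vendor rates)."""
    input_per_million: float
    output_per_million: float


def request_cost(pricing: ModelPricing, input_tokens: int, output_tokens: int) -> float:
    """Cost in USD of a single request, given its input and output token counts."""
    total = (input_tokens * pricing.input_per_million
             + output_tokens * pricing.output_per_million)
    return total / 1_000_000


def monthly_cost(pricing: ModelPricing, input_tokens: int, output_tokens: int,
                 requests_per_day: int, days: int = 30) -> float:
    """Projected monthly cost, assuming every request has the same shape."""
    return request_cost(pricing, input_tokens, output_tokens) * requests_per_day * days


# Assumed scenario: a RAG-style request with a long retrieved context
# going in and a short answer coming out.
pricing = ModelPricing(input_per_million=3.0, output_per_million=15.0)
per_request = request_cost(pricing, input_tokens=4_000, output_tokens=500)
per_month = monthly_cost(pricing, 4_000, 500, requests_per_day=10_000)

print(f"{per_request:.4f}")  # 0.0195
print(f"{per_month:.2f}")    # 5850.00
```

Even with placeholder numbers, the arithmetic shows why context size matters: in this scenario the retrieved context accounts for most of the input tokens, so trimming it changes the monthly bill more than shortening the answer would.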

For team members who have already earned one of these certifications, we review their development plans individually and support them in pursuing advanced certifications or alternatives focused on AI building best practices.

Pillar #3: AI Building Training

In this pillar, AI moves from awareness and assisted usage into real engineering capability. Certifications and AI-enabled workflows are important, but neither alone is sufficient to design, build, and operate production-grade AI systems. Modern solutions require experience with retrieval-augmented generation pipelines, multi-agent architectures, fine-tuned models, and the platforms that support them in real-world environments. Rather than outsourcing this capability, we have made a deliberate decision to build it internally and embed it into how our teams deliver software.

We do this through a structured, hands-on program that trains our engineers by applying modern AI technologies directly to real product features and delivery scenarios. This includes working with:

- Cloud AI platforms

- Agent orchestration frameworks

- Vector databases

- AI observability and performance-optimized inference

All of this work is done under real constraints of scale, security, and maintainability.
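For readers less familiar with retrieval-augmented generation, the sketch below shows the retrieval step in its simplest form: rank stored documents by cosine similarity to a query embedding, then assemble the best matches into a prompt. The in-memory dictionary stands in for a vector database, and the document names, texts, and hand-written embeddings are all hypothetical. A real pipeline would use an embedding model and a dedicated vector store.

```python
import math

# Toy stand-in for a vector database: document id -> (text, embedding).
# The embeddings are hand-written for illustration, not produced by a model.
DOCUMENTS = {
    "deploy-guide": ("Deployments go through the staging environment first.",
                     [0.9, 0.1, 0.0]),
    "security-policy": ("Secrets must never be committed to the repository.",
                        [0.1, 0.9, 0.1]),
    "style-guide": ("Functions should have descriptive names.",
                    [0.0, 0.2, 0.9]),
}


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query_embedding: list[float], k: int = 2) -> list[str]:
    """Return the ids of the k documents most similar to the query."""
    ranked = sorted(
        DOCUMENTS,
        key=lambda doc_id: cosine_similarity(query_embedding, DOCUMENTS[doc_id][1]),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(question: str, doc_ids: list[str]) -> str:
    """Assemble the retrieved passages and the question into one prompt."""
    context = "\n".join(f"- {DOCUMENTS[doc_id][0]}" for doc_id in doc_ids)
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


# Assumed query embedding for a deployment-related question.
hits = retrieve([0.8, 0.3, 0.0])
print(hits)  # ['deploy-guide', 'security-policy']
print(build_prompt("How do we deploy?", hits))
```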

From this program, we develop a focused cohort of approximately 30 AI Subject Matter Experts (SMEs). These SMEs elevate the organization by leading AI-driven client engagements, mentoring peers, documenting best practices, and continuously improving our standards, tools, and architectural patterns. The result is not experimentation for its own sake, but a durable internal capability to design, deploy, and scale AI-powered systems that meet enterprise expectations and deliver long-term value.

How Do the Three Pillars Work Together?

These pillars reinforce one another in a continuous improvement loop:

- Certification establishes a shared understanding.

- Standardized workflows enable daily application.

- Advanced training produces internal experts.

- Experts improve tooling and practices.

- Improved tooling makes certification more relevant.

Our guiding principle is simple: AI should help engineers do their best work, not replace them. We reject shortcuts that trade long-term reliability for short-term speed. Instead, we focus on disciplined engineering, responsible AI use, and continuous learning. This is how we build secure, maintainable software that lasts.